How It Works

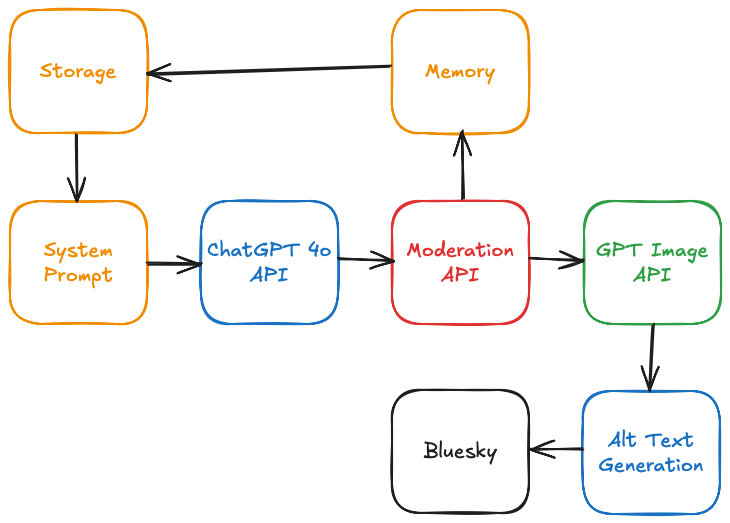

The process the bot uses to generate the art has grown more complicated over time. It is now a multi-step process:

The process starts with data stored from previous runs. That information goes into the system prompt to discourage the LLM from producing the same thing over and over again, which was an issue I encountered early on. Once the system prompt is constructed, it is submitted to the API with a request to come up with a prompt for today's art.
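To make the "avoid repeats" idea concrete, here is a minimal sketch of how stored memories could be folded into a system prompt. The `Memory` shape, the `build_system_prompt` name, the wording of the instructions, and the `max_memories` cap are all assumptions for illustration, not the bot's actual code.

```python
from dataclasses import dataclass


@dataclass
class Memory:
    """One stored summary of a previous day's art (hypothetical shape)."""
    date: str
    summary: str


def build_system_prompt(memories: list[Memory], max_memories: int = 10) -> str:
    """Build a system prompt that steers the model away from recent themes."""
    lines = ["You write a single image-generation prompt for today's art."]
    # Only the most recent entries are included so the prompt stays short.
    recent = memories[-max_memories:] if max_memories > 0 else []
    if recent:
        lines.append("Avoid repeating the subjects or styles of these recent pieces:")
        lines.extend(f"- {m.date}: {m.summary}" for m in recent)
    return "\n".join(lines)
```

Capping the number of memories is one possible design choice: it keeps the prompt small while still covering the most recent pieces, which are the ones most likely to be repeated.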

Because I'm pedantic and paranoid, the bot then sends that result through the OpenAI Moderation API to check that it isn't offensive and that the model hasn't gone crazy. The output of this step is "the prompt", and it is reused several times in the remaining steps of the process.
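The moderation gate might look roughly like the sketch below. It assumes the moderation response has been parsed into a dict with a `results` list whose entries carry a boolean `flagged` field, which matches the JSON body the moderation endpoint returns. The `moderate` callable is a hypothetical stand-in for the real API call, and `ModerationError`, `is_flagged`, and `moderated_prompt` are names invented here.

```python
from typing import Callable


class ModerationError(Exception):
    """Raised when a candidate prompt is flagged (hypothetical error type)."""


def is_flagged(response: dict) -> bool:
    """Return True if any result in a moderation response is flagged."""
    return any(r.get("flagged", False) for r in response.get("results", []))


def moderated_prompt(candidate: str, moderate: Callable[[str], dict]) -> str:
    """Pass the candidate through moderation and return it only if it is clean."""
    response = moderate(candidate)  # stand-in for the real moderation request
    if is_flagged(response):
        raise ModerationError("candidate prompt was flagged; not using it")
    return candidate
```

Raising on a flagged result is only one option. Retrying with a fresh candidate would work just as well, and the post does not say which the bot does.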

The prompt is sent back into the LLM to construct a memory of today's art for storage and use in future runs. The prompt is then sent to GPT Image to actually produce the art. The returned graphic is sent to the GPT Vision API to produce "alt text" and all of that is bundled together into a post that is submitted to Bluesky.
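The remaining steps can be sketched as one small orchestration function. Every name here is an assumption: each callable stands in for a real service call (the LLM, the image model, the vision model, storage, and Bluesky), and using the prompt as the post text is a guess, since the post only says everything is "bundled together".

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Post:
    """What gets published (hypothetical shape)."""
    text: str
    image: bytes
    alt_text: str


def run_pipeline(
    prompt: str,
    summarize: Callable[[str], str],
    save_memory: Callable[[str], None],
    generate_image: Callable[[str], bytes],
    describe_image: Callable[[bytes], str],
    publish: Callable[[Post], None],
) -> Post:
    """Run the post-moderation steps in the order described above."""
    # 1. Turn the prompt into a memory and store it for future runs.
    save_memory(summarize(prompt))
    # 2. Produce the art from the same prompt.
    image = generate_image(prompt)
    # 3. Ask the vision model for alt text describing the returned image.
    alt_text = describe_image(image)
    # 4. Bundle everything and publish it.
    post = Post(text=prompt, image=image, alt_text=alt_text)
    publish(post)
    return post
```

Passing the services in as callables keeps the flow easy to test with stubs and makes it obvious that the same prompt feeds both the memory step and the image step.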